In 1936, Alan Turing published his paper to solve the decision problem. The paper started the computer science discipline. In this article, we discuss the roots of the decision problem, from number theory and mathematical formalism. We then discuss how cryptanalysis prompted the creation of the first computers and how artificial intelligence has evolved since.

Number Theory in Ancient Alexandria and Beyond

In 300 BCE, on the coast of Alexandria, Euclid wrote his Elements. The mathematical treatise consists of hundreds of geometric proofs, all based on just five postulates. Apart from geometry, Euclid was fascinated by prime numbers, so he proved their infinitude. He also discovered an algorithm that could be used to find the greatest common divisor between two integers and provided the fundamental theorem of arithmetic which shows how all numbers are composed of only primes.

Five hundred years later, in the same metropolitan city, Diophantus mourned the death of his son. To help cope with his sorrow and despair, Diophantus collected many algebraic problems in his book, Arithmetica. The text contains a class of equations that later became known as Diophantine equations, the solutions of which can only be integers.

Fast forward to the 17th century. In the margins of his copy of Arithmetica, Pierre de Fermat, a French lawyer, recorded his theorems, most significantly his last theorem, a true conjecture since he never provided any proofs. The conjuncture was finally proved 358 years later by Sir Andrew Wiles in 1995.

Fermat's Last Theorem (FLT): This diophantine equation has no solution for n > 2 for integers.

In his proof, Wiles used elliptic curves [1], which, much like the rest of number theory, play a fundamental role in cryptography, the study of securing communication, which will be discussed later in the article.

Foundational Crisis of Mathematics

In 1873, Georg Cantor, a German mathematician, created set theory, introduced transcendental numbers, like π and e, and the diagonalization proof of the uncountability of real numbers. He showed that some infinities are bigger than others and that some are enumerable while others are not.

Although Cantor’s ideas were opposed in the beginning due to philosophical differences between him and his opponents, his theory became widely accepted with time. Set theory became the foundation for many different topics in mathematics since every mathematical object could be reduced to a set, even the definition of natural numbers.

In 1884, Gottlob Frege published The Foundation of Arithmetic [2], introducing predicate calculus, a useful set of logical rules widely used in mathematics, philosophy, and linguistics. He also relied on set theory as his foundation of mathematics.

Between 1910 and 1913, Bertrand Russell picked up Frege’s work. While writing Principia Mathematica, a three-volume work on the foundations of mathematics, he ran into an inconsistency, causing him to correspond with Frege to find a resolution.

This inconsistency came later to be known as Russell’s Paradox:

Let R be the set of all sets that are not members of themselves. If R is not a member of itself, then its definition entails that it is a member of itself; if it is a member of itself, then it is not a member of itself, since it is the set of all sets that are not members of themselves.

Although Frege viewed his life’s work as a failure, Russell ended up furthering Frege’s work on mathematical logistics after his death.

Hilbert’s Program and Gödel’s Incompleteness

The collapse of set theory as the theoretical base of mathematics initiated the holy grail search for a consistent foundation for the field. David Hilbert, one of the brightest mathematicians of the 19th century, sought completeness, consistency, and decidability, i.e., the existence of an effective method for deriving the correct answer.

Hilbert’s Program is a generalization of the second problem (out of 23) that Hilbert posed at the international congress of mathematicians in 1900. In an attempt to resolve all the flaws in any mathematical system, Hilbert proposed a program that simplifies all that we know about mathematics into a set of finite postulates whose consistency could be proved.

However, in 1931, Kurt Gödel, after reading the first volume of Principia Mathematica, proved through his incompleteness theorems that a finite mathematical system based on a countable number of postulates could never be consistent.

The most comprehensive current formal systems are the system of Principia Mathematica (PM) on the one hand, the Zermelo-Fraenkelian axiom-system of set theory on the other hand. These two systems are so far developed that you can formalize in them all proof methods that are currently in use in mathematics, i.e. you can reduce these proof methods to a few axioms and deduction rules. Therefore, the conclusion seems plausible that these deduction rules are sufficient to decide all mathematical questions expressible in those systems. We will show that this is not true.

Kurt Gödel in On formally undecidable propositions of Principia Mathematica and related systems I (1931)

Gödel killed Hilbert’s dream of consistency and completeness, as the first incompleteness theorem showed that if a set of axioms is consistent, then it is incomplete, while the second theorem stated that no consistent theory can ever prove its own consistency.

However, the decidability dream lived on for a few more years until Turing and Church worked out the solution.

Turing’s Paper: On Computable Numbers

At the congress of mathematicians in 1900, Hilbert also stated his tenth problem, the answer to which is a procedure to determine if a Diophantine equation is solvable or not. A problem that combines both number theory and mathematical logic, the problem doesn’t ask for a solution: instead, the “solution” is determining whether or not the problem itself is solvable.

In 1928, David Hilbert and Wilhelm Ackermann proposed the Entscheidungsproblem (the decision problem). A generalization of the tenth problem, it asks for an effective procedure that returns if a proposition can be proved from a set of postulates.

In 1936, in Cambridge, Turing attempted Hilbert’s Entscheidungsproblem problem. He proved for the general case that no mechanism exists to determine decidability. In his proof, Turing constructed a universal (Turing) machine which is, to this day, the most general mathematical model for computing. In other words, a Turing machine is the upper limit of what any computer can possibly achieve.

In Princeton, Alonzo Church published the same results a few months earlier using lambda calculus [Appendix], a formal system in mathematical logic that expresses computation based on abstraction, binding, and substitution. Alan Turing proved that lambda calculus is equivalent to Turing’s machine, i.e., it can compute everything that a Turing machine can [3]. This equivalence became known later as Turing Completeness, a classification of computers, operating systems, and programming languages. Its significance lies in quantifying the computational diversity a system is capable of.

In 1970, Matiyasevich finally solved Hilbert’s tenth problem based on work from Julia Robinson, Martin Davis, and Hilary Putnam.

Everything is a Turing Machine

In his seminal paper, Alan Turing introduced a new set: computable numbers, i.e., numbers that could be produced with any desired precision by some finite procedure. It contains all algebraic numbers and some transcendental numbers. This idea is similar to Gödel numbering, the function that Gödel used in his incompleteness theorems, which represents numbers as symbols using some encoding. Then it translates sequences of symbols to numbers once again that could be manipulated using rules of mathematics.

An irrational number has infinite decimal points, or in layman’s terms, it would take an endless amount of paper to write down all the digits. Take π, for example; it can be calculated using a Monte Carlo simulation shown in the appendix.

The program to write this simulation consists of specific instructions that could be encoded using numbers (Binary, ASCII, Unicode). Concatenating these instructions, similarly, their numbers produce a unique finite number that represents the program. This turns π into a computable number, making the infinite finite.

Yet numbers are not the only thing that can be represented using a Turing machine. Mathematical proofs, games, and any general program are all, functionally speaking, Turing machines hidden in plain sight. Any effective procedure, a method for solving a problem in a finite amount of time, is a Turing machine.

Now that theory of computation had been established; computers were well on their way to being built.

Building the First Digital Computer

World War II was a ghastly conflict that left tens of millions of deaths and much destruction. Before El Alamein, Sicily, and Normandy, the Allies were losing the war. As the French were already under the Vichy regime, it was only a matter of time before the BBC would air its radio shows in German.

The MI6 was keen on eavesdropping on the Axis Powers’ communication to win the war. Although the English were able to retrieve a few Enigma machines, the German military cipher device, from the battlefield, they couldn’t use the machines to decipher the encrypted communications. It prompted the employment of Alan Turing to break the German cipher at Bletchley Park.

After a few manual attempts, Turing realized that breaking the code by hand was impossible. He needed to build a machine. Out of that necessity, the Bombe was born. Neither a generic computer nor a digital one, the Bombe was nonetheless able to decipher the code, which is estimated to have shortened the war by two to four years.

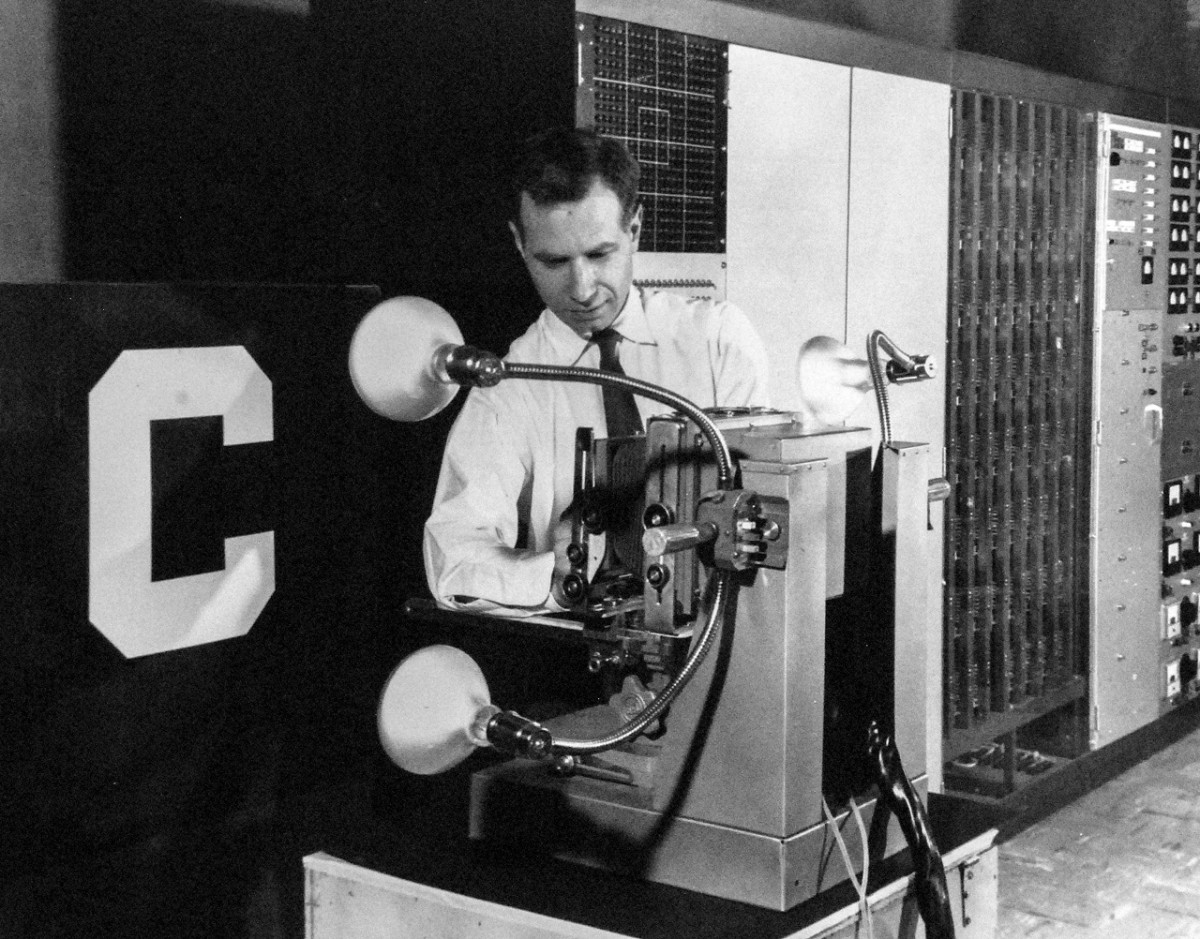

Turing’s cryptanalysis process inspired the deciphering of code generated by the lesser-known, more complicated cryptography machine, the Lorenz cipher [4], which was used in the highest levels of the Nazi party within Hitler’s closest circle. The Lorenz had 2^501 (equivalently 6.5 x 10^150) wheel patterns to generate a single letter, making breaking any code by hand out of the question. The Colossus, the world’s first programmable electronic digital computer, was built to brute force the combination.

Digital Computers: The Princeton Architecture

At Princeton, John von Neumann read Turing’s paper and subsequently engineered a digital computer with a separate arithmetic-logic unit (ALU), central processing unit (CPU), and memory, all inspired by the Turing machine. This new design came to be known as the Princeton Architecture (Von Neumann architecture).

Many digital computers soon followed. In 1945, Electronic Numerical Integrator and Computer (ENIAC) was created for a Ballistic Research Laboratory. It was a general-purpose, programmable digital computer. In 1949, the Electronic Delay Storage Automatic Calculator (EDSAC) was designed in the Mathematical Laboratory in Cambridge. The Manchester Mark I (Manchester baby) became operational in 1949 by running a program to search for Mersenne primes.

In addition to cryptanalysis, some of the first applications of computers were automated theorem proving, games, and of course, gameplay which would soon give rise to artificial intelligence.

Man-Machine Duality

The Chinese room argument [5] posits that a program can never have a mind or a consciousness, regardless of how intelligent it might be. A computer is merely programmed to respond to specific inputs using the instructions of the program.

In 1950, Alan Turing published Computing Machinery and Intelligence in Mind, a journal on psychology and philosophy. He started his paper with an intentionally vague question: “Can machines think?” before concluding the first section with the imitation game [Appendix], the same name as the film that would eventually become a pop culture phenomenon and box office hit [6]. Turing attempted to escape philosophical definitions of the word think and judge the effectiveness of machinery using only one criterion: what humans can achieve.

Making a few references to the Manchester Mark, Turing perceived all problems as computational ones that could be modeled as discrete state machines and solved by computers, so long as they were provided with enough memory and time. To that end, Turing viewed the imitation game as only a computational problem [7].

In 1951, using the Manchester Mark, Christopher Strachey wrote the first game agent to play checkers, while Dietrich Prinz wrote one for chess. In 1954, a computer translated sixty sentences from Russian to English in the Georgetown- IBM experiment.

In 1955, John McCarthy [8] created the Dartmouth Summer Research Project on Artificial Intelligence. Researchers, including Claude Shannon, Herbert Simon, and John Nash, for six weeks, came together to discuss automated reasoning ideas. The researchers wrote a program to prove 38 out of the first 52 theorems in the Principia Mathematica. Many researchers regard Dartmouth Workshop as the birth of Artificial Intelligence as a discipline.

In 1957, Frank Rosenblatt, a psychologist, created the Perceptron, an electronic device that mimics the biological learning process in the central nervous system. This Perceptron would evolve in the early 2010s and later become a system of powerful neural networks that rely on powerful computations and massive datasets; both were missing in the 1950s.

.

.

.

Most recently, however, in the past decade, breakthroughs in reinforcement learning [9] have fueled intelligent machines to beat the world’s human champions at chess, Shogi, and Go, in addition to playing and excelling at human-level games like Atari and League of Legends [10].

But apart from playing games, a fully automated life with robotics all around, doing all the manual work still seems out of reach.

Moravec’s Paradox

In 1950, in tune with post-war’s optimism and confidence, Issac Asimov proposed the three laws of robotics [Appendix] to ensure the safety of humans, the servitude, and the survival of robots.

In 1956, Herbert Simons said: “Machines will be capable, within twenty years, of doing any work a man can do.” Researchers predicted that computers would render people obsolete in a matter of decades, yet seventy years later, this prophecy still feels far-fetched.

In 1988, Hans Moravec wrote: “It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.”

The irony captured by this comparison came to be known as Moravec’s paradox. Over thirty years later, we’re not much closer to cracking the code that would give computers a human-like perception. Although computers today can have conversations with people, generate faces, and play video games, they still lack any subjective perception, cognition, sensory, or motor skills.

To understand the perception of artificially intelligent agents, recent attempts to analyze the success of artificial neural networks have used the framework of the British neuroscientist David Marr.

Marr regarded visual processing as an information processing system that he broke down into levels of analysis consisting of computational, algorithmic, and implementational/physical. The computational level of analysis asks what a system does, while the algorithmic level of analysis asks how the system does it. The physical level of analysis asks how the system represents the entities that it uses.

Understanding how agents learn is the key to intelligence, and Marr provided a framework for how to do it. If we understand smaller pieces of the puzzle, like backpropagation and data efficiency, we might eventually be able to rely on machinery for most of our chores. This will have enormous implications for our psychological well-being and our lives in general because, with the absence of work or employment of some kind, we’ll have to find other avenues to give our lives purpose and meaning, which Keynes highlighted in his paper about the economic possibilities [11].

The search for a mathematical foundation has inspired the conceptualization of Turing machines, a framework for computation. Cryptanalysis led to the construction of physical machinery based on the architecture inspired by Turing.

After the creation of computers, mathematics and machinery remained intertwined. Scientists and mathematicians attempted to utilize computers in automated theorem proving, cryptography, and artificial intelligence. Alan Turing explored the man-machine duality further as he shifted his research focus from abstract philosophical questions about human thought to a concrete test by which he could evaluate the effectiveness of these new thinking machines.

Recent advances in artificial intelligence have made strides in developing algorithms for more complex tasks like human conversation, deepfakes, and gameplay. Nonetheless, other domains like robotics, perception, and cognition continue to prove elusive, and the possibility of artificial intelligence surpassing human intelligence continues to appear far-fetched.

Claude Shannon, the founder of information theory, aspired to a better future when he wrote, “I visualize a time when we will be to robots what dogs are to humans, and I'm rooting for the machines.” While Alan Turing grounded us in reality when he wrote, “We can only see a short distance ahead, but we can see plenty there that needs to be done.”

Appendix

- How elliptic curves are used in cryptography.

- Reference on Frege’s work.

- Here’s Gabriel Lebec’s showing the equivalence of lambda calculus to a Turing machine.

- James Grime explains how the Lorenze machine works.

- A video on the Chinese room thought experiment.

- Turing refrained from investigating his imitation game using the theory of games introduced by John von Neumann and Oskar Morgenstern in the 1940s. Earlier that year, in 1950, John Nash proved the existence of an equilibrium (Nash Equilibrium) in n-persons games.

- In his 1936 paper, Turing revealed the equivalence of his machinery to a human computer. In his 1950 paper, Turing explored this duality and furthered it more extensively.

- “When there’s a will to fail, obstacles can be found.” — John McCarthy.

- Reinforcement learning (RL) (Sutton, Barto) provides a framework for agents to learn where the agent models the environment as a Markov decision process and tries to maximize the cumulative reward. In the last decade, RL was combined with artificial neural networks for state-action approximation, Monte-Carlo tree search (Chaslot et al.) for state enumeration reduction, and self-play to automate training.

- Artificial intelligent (AI) agents deploying reinforcement learning have achieved human-level control to play Atari games (Minh et al.). They have beaten the world’s champion in Go (Silver et al.) and have mastered chess, Go, shogi, and Atari games without being fed the rules of the games (Schrittwieser et al.).

- Economic Possibilities for our Grandchildren

Appendix: Lambda Calculus

- Lambda Calculus has no global state (which is the tape in a turning machine).

- All functions are pure; they always return the same output for the same input.

- All values are immutable.

- No loops exist; recursion is used instead.

- Functions are your unit of composition; it’s easier to reason about.

- Thinking of the halting program is also helpful since Turing has elegantly proved that no program can determine if another program is halting. This opens our minds to the world of timeouts.

Appendix: Monte Carlo Simulation

const zero = 0;

const one = 1;

const two = 2;

const thousand = 1000;

var Point = class {

constructor(x, y)

{

this.x = x;

this.y = y;

}

toString() {

return `({this.x}, {this.y})`;

}

};

function generate_2d_point(xstart, xrange, ystart, yrange) {

return () => new Point(Math.random() * xrange + xstart, Math.random() * yrange + ystart);

}

function check_inside_circle(center, radius, point) {

if ((point.x - center.x) ** 2 + (point.y - center.y) ** 2 < radius ** 2) {

return one;

}

return zero;

}

function run(sample_number, probability_distribution, estimator_function) {

let miu = 0;

for (let i = 0; i < sample_number; i++) {

if (estimator_function(probability_distribution())) {

miu = miu + 1

}

}

return miu / sample_number;

}

function calculate_pi() {

let sample_number = thousand * thousand;

let radius = one;

let center = new Point(zero, zero);

let area_of_square = two * two;

let generator = generate_2d_point(-one, two, -one, two);

let estimator_function = (point) => check_inside_circle(center, radius, point);

return run(sample_number, generator, estimator_function) * area_of_square;

}

console.log(calculate_pi());This is a verbose javascript implementation of the π generation using a Monte Carlo simulation. It inscribes a circle inside of a square. As grains of raindrops fall on the area of the square, the ratio between grains inside and outside of the circle leads to the calculation of pi with the desired precision based on the number of grains. This code is hosted on GitHub gists under the author’s account: shehio.

Appendix: The Imitation Game

The new form of the problem can be described in terms of a game which we call the 'imitation game." It is played with three people, a man (A), a woman (B), and an interrogator (C) who may be of either sex. The interrogator stays in a room apart front the other two. The object of the game for the interrogator is to determine which of the other two is the man and which is the woman. He knows them by labels X and Y, and at the end of the game he says either "X is A and Y is B" or "X is B and Y is A." The interrogator is allowed to put questions to A and B thus:

C: Will X please tell me the length of his or her hair?

Now suppose X is actually A, then A must answer. It is A's object in the game to try and cause C to make the wrong identification. His answer might therefore be:

"My hair is shingled, and the longest strands are about nine inches long."

In order that tones of voice may not help the interrogator the answers should be written, or better still, typewritten. The ideal arrangement is to have a teleprinter communicating between the two rooms. Alternatively the question and answers can be repeated by an intermediary. The object of the game for the third player (B) is to help the interrogator. The best strategy for her is probably to give truthful answers. She can add such things as "I am the woman, don't listen to him!" to her answers, but it will avail nothing as the man can make similar remarks.

We now ask the question, "What will happen when a machine takes the part of A in this game?" Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, "Can machines think?"

Alan Turing in Computing Machinery and Intelligence

Appendix: Asimov’s Laws

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.